Overview:

Initially designed for rendering graphics, Graphics Processing Units (GPUs) have evolved to handle a wide range of computational tasks, making them indispensable in fields such as machine learning, artificial intelligence, scientific simulations, and video rendering. To understand what a GPU is, it helps to look at the key advancements behind that shift: massive parallelism, memory optimized for throughput, and programming platforms such as CUDA and OpenCL. Together, these let GPUs process vast amounts of data efficiently and significantly accelerate complex computations. The benefits of GPUs include high processing power, quicker execution of complex workloads, scalability, cost-effectiveness, enhanced visual capabilities, and improved computational accuracy.

Despite their advantages, GPUs also present several challenges. They are less effective for sequential tasks and require significant investment in terms of cost and programming expertise. Debugging GPU-accelerated code is more complex, and data transfer between CPU and GPU can introduce overhead. Compatibility issues and vendor lock-in are additional concerns. Looking to the future, GPUs are poised to play a critical role in emerging technologies such as edge computing, autonomous vehicles, and augmented reality. They will continue to drive advancements in AI and ML, improve energy efficiency, and open up new use cases and applications. As GPU technology continues to evolve, it will further expand its impact across various industries, shaping the future of computing and enabling innovative solutions to complex problems.

Contents:

- What Is a GPU

- Key Advancements in the GPU Field

- Key Application Areas of GPUs

- Key Benefits Offered by GPUs

- Key Challenges Related to GPUs

- Potential Future Impact of GPUs

- Summing Up

So, what is a GPU:

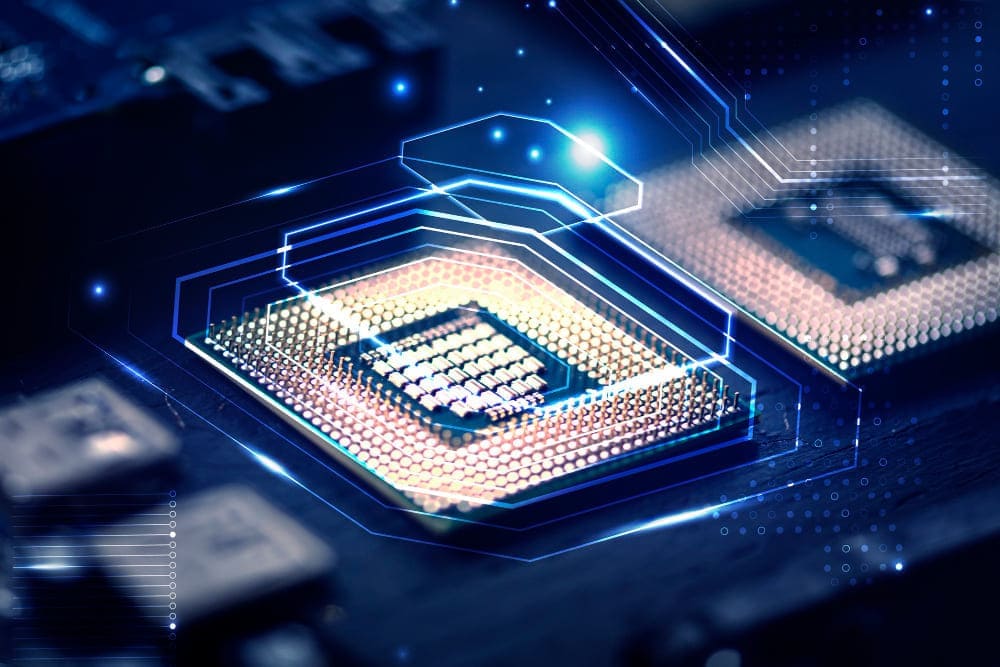

A GPU (Graphics Processing Unit) is a specialized computer chip designed to handle intricate computations and process vast amounts of data with exceptional speed and efficiency. Originally developed for rendering images and graphics in real-time applications such as video games, GPUs have evolved into powerful tools that excel in parallel computing tasks. Their architecture, comprising thousands of cores, enables concurrent execution of multiple calculations, which is crucial for accelerating tasks requiring intensive mathematical operations. This parallel processing capability makes GPUs particularly valuable in fields like machine learning, where they play a pivotal role in training complex neural networks by processing large datasets and performing matrix operations swiftly.

In scientific simulations, GPUs enhance computational accuracy and speed by enabling rapid processing of simulations related to weather forecasting, fluid dynamics, molecular modeling, and more. Their ability to handle complex calculations quickly has also revolutionized industries like video rendering and editing, where GPUs facilitate faster production times and enhance the quality of visual effects in films, animations, and virtual reality environments. Across various sectors, from healthcare and finance to entertainment and research, GPUs continue to drive innovation by enabling faster data processing, advanced simulations, and enhanced computational capabilities that were previously unattainable with traditional CPU-based systems.

Key Advancements in the GPU Field:

Graphics Processing Units (GPUs) have seen significant advancements over the years, positioning themselves as critical components not only in graphics rendering but also in high-performance computing and artificial intelligence. Below are some of the key advancements in the GPU field:

1. Parallelism:

GPUs are inherently designed to handle parallel computations. Unlike Central Processing Units (CPUs) which are optimized for sequential task processing, GPUs can process thousands of threads simultaneously. This capability makes them particularly well-suited for tasks that require massive amounts of data processing, such as:

Graphics Rendering: GPUs can render complex graphics scenes by performing millions of calculations in parallel, which is essential for video games and simulations.

Scientific Simulations: Tasks like weather forecasting, molecular modeling, and fluid dynamics simulations benefit from the parallel processing power of GPUs.

Machine Learning: Training deep neural networks involves performing numerous matrix multiplications and additions, which are highly parallelizable and thus accelerated by GPUs.
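To illustrate why matrix multiplication parallelizes so well, here is a minimal pure-Python sketch. The names `output_cell` and `matmul` are illustrative and not part of any GPU library, and the loops run sequentially here; on a GPU, each call to `output_cell` would typically be handled by its own thread.

```python
def output_cell(a, b, row, col):
    """Compute one element of A @ B.

    The function reads A and B but writes nothing shared, so every
    (row, col) pair can be computed independently of the others.
    """
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b):
    """Multiply two matrices given as lists of rows.

    The loops only enumerate independent work items; a GPU would launch
    one thread per (row, col) instead of iterating.
    """
    rows, cols = len(a), len(b[0])
    return [[output_cell(a, b, r, c) for c in range(cols)] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))  # [[19, 22], [43, 50]]
```

Because no output cell depends on another, the amount of available parallelism grows with the size of the result matrix.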

2. Memory Optimization:

GPUs come equipped with their own dedicated memory, known as video memory or VRAM, which is optimized for handling large datasets efficiently. Key features of GPU memory include:

High Bandwidth: GPU memory offers significantly higher bandwidth compared to traditional CPU memory, allowing for faster data transfer rates. For example, GDDR6 and HBM2 memory technologies provide the necessary speed for high-resolution graphics and large-scale computations.

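Latency Hiding: Individual GPU memory accesses are not especially fast, because GPU memory is tuned for throughput rather than low latency. GPUs compensate by keeping many threads in flight and switching to threads that are ready while others wait on memory, so the cores stay busy. This is crucial for real-time applications such as gaming and live video processing.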

Large Capacity: Modern GPUs have VRAM capacities ranging from 4GB to 48GB or more, allowing them to handle large models and datasets required in deep learning and data analysis tasks.
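The capacity and bandwidth figures above lend themselves to quick back-of-the-envelope checks. The sketch below is plain arithmetic; the model size, the 24 GB card, and both bandwidth values are assumptions chosen for illustration, not measurements of any specific product.

```python
def model_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


def read_time_ms(size_gb, bandwidth_gb_per_s):
    """Time in milliseconds to stream `size_gb` of data once."""
    return size_gb * 1000 / bandwidth_gb_per_s


# Hypothetical 7-billion-parameter model stored as 16-bit (2-byte) values.
weights_gb = model_memory_gb(7_000_000_000, 2)
print(weights_gb)                     # 14.0
print(weights_gb <= 24)               # True: fits on a hypothetical 24 GB card

# Assumed bandwidths, picked only to show the order-of-magnitude difference.
print(read_time_ms(weights_gb, 500))  # 28.0  (assumed 500 GB/s)
print(read_time_ms(weights_gb, 50))   # 280.0 (assumed 50 GB/s)
```

A workload that has to touch every weight repeatedly is limited by the second number far more than by raw arithmetic speed, which is why memory bandwidth matters so much.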

3. Programming Languages and APIs:

Several specialized programming languages and APIs have been developed to harness the full potential of GPUs, making it easier for developers to write code that runs efficiently on these devices. Key examples include:

CUDA (Compute Unified Device Architecture): Developed by NVIDIA, CUDA is a parallel computing platform and programming model that allows developers to use C, C++, and Fortran for coding GPUs. It provides libraries and tools to optimize performance in various applications like linear algebra, signal processing, and machine learning.

OpenCL (Open Computing Language): An open standard for cross-platform parallel programming, OpenCL supports a wide range of devices, including GPUs from different manufacturers. It allows developers to write code that can be executed on any compatible hardware, promoting portability and flexibility.

DirectX and Vulkan: These APIs are primarily used in graphics rendering. DirectX, developed by Microsoft, and Vulkan, maintained by the Khronos Group, provide low-level access to GPU hardware, enabling high-performance graphics and compute applications. They are widely used in game development and real-time rendering applications.
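The programming model behind CUDA-style kernels can be sketched without a GPU. The emulation below is pure Python and runs sequentially; `vector_add_kernel` and `launch` are hypothetical names for illustration. The index formula mirrors the common CUDA idiom `blockIdx.x * blockDim.x + threadIdx.x`.

```python
def vector_add_kernel(block_idx, thread_idx, block_dim, x, y, out):
    """Body executed by one emulated thread."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):                        # guard: the last block may overshoot
        out[i] = x[i] + y[i]


def launch(kernel, num_blocks, block_dim, *args):
    """Sequentially emulate a 1-D kernel launch.

    A real GPU would run these threads concurrently; only the indexing
    scheme is reproduced here.
    """
    for block_idx in range(num_blocks):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


x = [1, 2, 3, 4, 5]
y = [10, 20, 30, 40, 50]
out = [0] * len(x)
block_dim = 4
num_blocks = (len(x) + block_dim - 1) // block_dim  # ceiling division, here 2
launch(vector_add_kernel, num_blocks, block_dim, x, y, out)
print(out)  # [11, 22, 33, 44, 55]
```

Two blocks of four threads give eight threads for five elements, which is why the bounds check inside the kernel is needed.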

4. Enhanced Architecture:

Modern GPUs incorporate advanced architectural features that further enhance their performance and efficiency:

Tensor Cores: Specialized cores designed to accelerate deep learning tasks. Tensor cores can perform mixed-precision matrix multiplications and additions, which are fundamental operations in neural network training and inference.

Ray Tracing Cores: Dedicated cores for real-time ray tracing, which simulates the physical behavior of light to produce realistic lighting, shadows, and reflections in graphics applications.

Multi-GPU Scalability: Technologies like NVIDIA’s NVLink and AMD’s Infinity Fabric allow multiple GPUs to work together seamlessly, providing a scalable solution for supercomputing tasks.
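The mixed-precision idea mentioned for tensor cores can be illustrated in plain Python. This is a sketch under stated assumptions, not a model of any particular hardware: `to_half` rounds values to IEEE 754 half precision using the standard `struct` module, and Python's double-precision float stands in for the wider accumulator (hardware would typically use 32-bit floats). All function names are illustrative.

```python
import struct


def to_half(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def dot_half_accumulate(xs, ys):
    """Half-precision inputs and a half-precision running sum."""
    total = 0.0
    for x, y in zip(xs, ys):
        product = to_half(to_half(x) * to_half(y))
        total = to_half(total + product)
    return total


def dot_mixed(xs, ys):
    """Half-precision inputs, running sum kept in higher precision."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += to_half(x) * to_half(y)
    return total


xs = [0.1] * 10_000
ys = [0.1] * 10_000
exact = 100.0  # 10,000 * 0.1 * 0.1

# The half-precision running sum stalls once each product is smaller than
# half the gap between neighbouring half-precision values.
print(dot_half_accumulate(xs, ys))
# The mixed version stays close to the exact answer.
print(dot_mixed(xs, ys))
```

Keeping the inputs small while accumulating in a wider format is the trade-off that lets mixed-precision hardware gain speed without the running sum losing most of its accuracy.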

5. Software Ecosystem:

The GPU software ecosystem has also matured, providing robust tools and libraries to support various applications:

Deep Learning Frameworks: Popular frameworks like TensorFlow, PyTorch, and Caffe are optimized for GPU acceleration, providing pre-built functions and models that leverage GPU power for faster training and inference.

Simulation and Modeling Tools: Software like MATLAB and ANSYS offer GPU acceleration options to speed up complex simulations and analyses.

Game Engines: Engines like Unreal Engine and Unity have integrated support for GPU features, enabling developers to create highly detailed and responsive games.

Key Application Areas of GPUs:

1. Machine Learning and Artificial Intelligence:

GPUs have become indispensable in the field of machine learning and artificial intelligence (AI) due to their ability to accelerate the training and inference processes of neural networks.

Training Neural Networks: Training deep learning models involves performing numerous computations on large datasets, which can be parallelized effectively on GPUs. This significantly reduces the time required to train models. For example, transformer language models such as Google’s BERT and OpenAI’s GPT family, which learn from vast amounts of text data, are commonly trained or fine-tuned on GPUs.

Processing Large Datasets: GPUs are adept at handling large datasets used in AI applications, enabling faster data processing and real-time analytics. Companies like NVIDIA provide specialized libraries such as cuDNN and TensorRT to optimize deep learning tasks on GPUs.

2. Scientific Simulations:

In scientific research, GPUs are used to accelerate complex simulations, improving both speed and accuracy.

Climate Modeling: GPUs help in simulating climate patterns and predicting weather changes by processing vast amounts of environmental data. This is critical for understanding climate change and planning for natural disasters.

Medical Imaging: Techniques like MRI and CT scans generate large volumes of data that need rapid processing. GPUs enable real-time image reconstruction and analysis, aiding in quicker diagnosis and treatment planning.

Oil Exploration: In the energy sector, GPUs are used to process seismic data for oil and gas exploration. This speeds up the analysis of subsurface structures, improving the accuracy and efficiency of locating resources.

3. Video Rendering and Graphics:

GPUs are essential in the fields of video rendering and graphics processing, powering applications from entertainment to professional design.

Video Games: GPUs render high-quality graphics in real-time, allowing for immersive gaming experiences. Technologies like real-time ray tracing, supported by modern GPUs, enhance visual realism by accurately simulating light behavior.

Animations: Animation studios use GPUs to render complex scenes and effects. For instance, movies from studios like Pixar and DreamWorks rely on GPU-accelerated rendering to produce detailed animations.

Virtual Reality (VR): VR applications require high frame rates and low latency to provide a seamless experience. GPUs handle the intense graphical computations needed for VR, enabling applications in gaming, training, and simulations.

4. Cloud Computing:

GPUs are increasingly being integrated into cloud computing environments to accelerate various computational tasks, offering scalable and efficient solutions.

Video Transcoding: In cloud services, GPUs are used to transcode videos into different formats and resolutions quickly. This is crucial for streaming services like Netflix and YouTube, which need to deliver video content to millions of users simultaneously.

AI and ML Services: Cloud providers like AWS, Google Cloud, and Microsoft Azure offer GPU instances for machine learning and AI workloads. This allows businesses to leverage powerful GPUs without investing in physical hardware, facilitating scalable and cost-effective solutions.

Data Analytics: GPUs accelerate big data analytics by processing large datasets faster than traditional CPU-based systems. Tools like RAPIDS leverage GPU power to speed up data processing tasks in environments such as Apache Spark and Dask.

5. Financial Modeling and Risk Analysis:

GPUs are increasingly being used in the financial sector to enhance modeling and risk analysis.

High-Frequency Trading: In high-frequency trading, even tiny delays matter. GPUs can process large volumes of market data in parallel, helping firms evaluate pricing and risk models quickly enough to inform trading decisions.

Risk Management: Financial institutions use GPUs to run complex simulations and risk models, such as Monte Carlo simulations, which require significant computational power. This helps in better forecasting and managing financial risks.
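A Monte Carlo risk model shows why this kind of workload suits GPUs: every simulated path is independent. The sketch below estimates a one-day 95% value at risk in pure Python. It assumes normally distributed returns, which is a deliberate simplification, and the names `simulate_loss` and `value_at_risk` are illustrative. It runs sequentially on the CPU; a GPU version would typically assign one path per thread.

```python
import random


def simulate_loss(rng, mean_return, volatility):
    """Draw one simulated return and express it as a loss (positive = bad)."""
    return -rng.gauss(mean_return, volatility)


def value_at_risk(num_paths, mean_return, volatility, confidence=0.95, seed=0):
    """Estimate the loss that is not exceeded with the given confidence."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    losses = sorted(
        simulate_loss(rng, mean_return, volatility) for _ in range(num_paths)
    )
    index = min(int(confidence * num_paths), num_paths - 1)
    return losses[index]


# Hypothetical portfolio: zero mean daily return, 2% daily volatility.
var_95 = value_at_risk(50_000, mean_return=0.0, volatility=0.02)
print(var_95)  # roughly 1.645 * 0.02, i.e. about 0.033, for a normal model
```

Accuracy improves with the number of paths, so the ability to run many paths at once translates directly into tighter estimates in the same wall-clock time.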

6. Genomics and Bioinformatics:

The field of genomics and bioinformatics benefits immensely from the computational power of GPUs.

Genome Sequencing: GPUs accelerate the process of sequencing genomes, which involves comparing and analyzing large sequences of DNA. This enables faster and more cost-effective genomic research and personalized medicine.

Protein Folding: Understanding protein structures and how they fold is crucial in drug discovery and development. GPUs power the simulations that predict protein folding, helping researchers design effective drugs.

7. Autonomous Vehicles:

GPUs play a critical role in the development and operation of autonomous vehicles.

Real-Time Processing: Autonomous vehicles require real-time data processing from various sensors, including cameras, LIDAR, and radar. GPUs handle the simultaneous processing of this data to make instantaneous driving decisions.

Training Driving Models: The development of self-driving algorithms involves training complex models on large datasets of driving scenarios. GPUs accelerate this training process, allowing for more rapid development and testing of autonomous systems.

Key Benefits Offered by GPUs:

1. High Processing Power:

GPUs are designed with thousands of small processing cores that can perform tasks concurrently. This architecture allows them to handle a vast number of calculations simultaneously, making them exceptionally powerful for parallel processing.

Parallelism: Each core can execute instructions independently, enabling the GPU to process multiple data streams at once. This is particularly beneficial for applications such as graphics rendering, scientific simulations, and machine learning, where tasks can be divided into smaller parallelizable units.

Example:

In graphics rendering, GPUs can process pixels or vertices simultaneously, significantly speeding up the rendering of complex scenes in video games and simulations.

2. Quicker Execution of Complex Workloads:

GPUs are optimized for executing complex workloads faster than traditional CPUs, which is crucial for applications where time is a critical factor.

Speed: The ability of GPUs to perform numerous operations concurrently results in quicker processing times for complex tasks. This makes GPUs ideal for real-time applications.

Examples:

Medical Imaging: In medical imaging, GPUs accelerate the processing of large image datasets, enabling real-time analysis and diagnostics. Techniques such as MRI and CT scans benefit from the high-speed data processing capabilities of GPUs.

Financial Trading: High-frequency trading platforms use GPUs to analyze market data and execute trades in milliseconds. The rapid processing power of GPUs allows traders to respond to market changes almost instantaneously, providing a competitive edge.

3. High Scalability:

GPU computing solutions are highly scalable, which means that systems can be easily expanded by adding more GPUs or GPU-accelerated clusters to increase processing power.

Scalability: Administrators can enhance the computational capabilities of a system without significant redesign or overhaul. This scalability makes GPUs a flexible solution for growing computational needs.

Cluster Computing: In data centers and cloud environments, multiple GPUs can be combined to form powerful clusters. These clusters can tackle large-scale problems in scientific research, artificial intelligence, and big data analytics.

Example:

Research institutions often use GPU clusters to conduct simulations and analyses that would be infeasible with a single GPU or a CPU-based system alone.
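How much a system gains from additional GPUs depends on how much of the workload actually runs in parallel. Amdahl's law, a standard result that is not specific to this article, captures the limit. The sketch below uses illustrative numbers: a workload assumed to be 95% parallelizable.

```python
def amdahl_speedup(parallel_fraction, num_processors):
    """Upper bound on speedup when only part of the work can be parallelized."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / num_processors)


# Assumed workload: 95% of the runtime is parallelizable.
print(round(amdahl_speedup(0.95, 8), 2))   # 5.93
print(round(amdahl_speedup(0.95, 64), 2))  # 15.42
# No matter how many processors are added, the bound approaches 1 / 0.05 = 20.
```

Going from 8 to 64 processors multiplies the hardware by eight but the speedup by less than three, so reducing the serial portion often pays off more than adding devices.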

4. Cost-Effectiveness:

GPUs provide a cost-effective alternative to achieving high computational power compared to traditional CPU-based clusters.

Efficiency: For highly parallel workloads, GPUs typically deliver more performance per watt than CPUs. A single GPU draws considerable power, but it can often finish the same job with less total energy, which leads to lower operational costs in environments with extensive computational demands.

Hardware Requirements: To reach desired processing goals, fewer GPUs are needed compared to an equivalent CPU-based setup. This reduces the overall hardware investment and maintenance costs.

Example:

In cloud computing, services like Amazon Web Services (AWS) and Google Cloud Platform (GCP) offer GPU instances. Their hourly price is usually higher than that of CPU-only instances, but they can finish highly parallel jobs in far less time, so the cost per job can be a fraction of the CPU-only cost. Businesses can leverage these GPU instances for intensive workloads like machine learning training and large-scale simulations without significant capital expenditure.
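Whether a GPU instance is cheaper depends on cost per job rather than price per hour. The arithmetic below uses made-up prices and runtimes purely for illustration; they are not quotes from any cloud provider.

```python
def job_cost(hourly_price, hours):
    """Total cost of running one job to completion."""
    return hourly_price * hours


def break_even_speedup(gpu_hourly_price, cpu_hourly_price):
    """Speedup the GPU must reach before it becomes the cheaper option."""
    return gpu_hourly_price / cpu_hourly_price


# Hypothetical prices: CPU instance at 1.00 per hour, GPU instance at 4.00.
cpu_cost = job_cost(hourly_price=1.0, hours=40.0)
gpu_cost = job_cost(hourly_price=4.0, hours=5.0)  # assumes an 8x speedup
print(cpu_cost)                      # 40.0
print(gpu_cost)                      # 20.0
print(break_even_speedup(4.0, 1.0))  # 4.0
```

In this example the GPU instance costs four times as much per hour, so it only saves money for jobs that run more than four times faster on it.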

5. Enhanced Visual and Graphics Capabilities:

GPUs are specifically designed to handle graphics and visual data, making them essential for applications that require high-quality visual output.

Real-Time Rendering: GPUs can render high-quality graphics in real-time, which is critical for gaming, virtual reality, and simulations. Technologies like real-time ray tracing enable highly realistic lighting, shadows, and reflections.

Professional Graphics Work: Industries such as film, animation, and design rely on GPUs for rendering detailed and complex visual effects. Software like Adobe Premiere Pro and Autodesk Maya utilize GPU acceleration to enhance performance and reduce rendering times.

Example:

The use of NVIDIA RTX GPUs in the game development process allows for real-time ray tracing, providing gamers with lifelike graphics and immersive experiences. In film production, movies like “Toy Story 4” and “Avengers: Endgame” have utilized GPU-accelerated rendering to create stunning visual effects and animations.

6. Improved Computational Accuracy:

GPUs can improve the accuracy of computational results, mainly by making higher-resolution and higher-precision calculations fast enough to be practical.

Scientific Computing: In scientific research, precise calculations are paramount. Many GPUs support double-precision (FP64) floating-point arithmetic for such work, and their speed makes it practical to run simulations at finer resolution or with more samples, which improves the quality of the results.

Deep Learning: In deep learning, tensor cores in GPUs accelerate the training of neural networks by performing mixed-precision calculations, balancing speed and accuracy to achieve optimal results.

Example:

In the field of astrophysics, GPUs are used to simulate and analyze the behavior of galaxies and black holes at high resolution, providing insights that would be difficult to obtain with coarser computational methods. In deep learning, systems like AlphaGo have relied on GPU-accelerated neural networks to master complex games like Go.

7. Versatility and Adaptability:

GPUs are versatile and can be adapted to a wide range of applications beyond their traditional use in graphics rendering.

General-Purpose Computing: Through programming frameworks like CUDA and OpenCL, GPUs can be used for general-purpose computing tasks (GPGPU). This allows them to accelerate a variety of workloads, from scientific simulations to data analysis.

Artificial Intelligence: GPUs are not only used for training AI models but also for real-time inference, where they process inputs and generate outputs quickly. This is crucial for applications such as autonomous vehicles, voice recognition, and image processing.

Example:

In healthcare, GPUs are used to accelerate drug discovery by simulating molecular interactions at high speed, which helps in identifying potential drug candidates more quickly. In autonomous driving, companies like Tesla and Waymo use GPUs to process data from sensors and make real-time driving decisions, ensuring safety and efficiency on the roads.

Key Challenges Related to GPUs:

While Graphics Processing Units (GPUs) offer numerous benefits, they also present several challenges that can limit their effectiveness in certain scenarios. Below, we discuss these challenges in detail and provide examples to illustrate each point.

1. Workload Specialization:

Not all workloads benefit from GPU acceleration, particularly those that are heavily sequential or require extensive branching.

Sequential Processing: GPUs excel at parallel processing but are less effective for tasks that need to be executed in a specific sequence. This can limit their use in applications that rely on sequential logic.

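Branching: Workloads with complex branching (e.g., if-else conditions) can lead to inefficiencies in GPU execution. Threads are executed in groups that run the same instruction in lockstep, so when threads in a group take different branches, the GPU runs each path in turn while the threads on the other path sit idle.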

Example:

Certain database operations and some algorithms in computational physics that require heavy sequential processing may not see significant performance improvements when run on GPUs compared to CPUs.
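The cost of branching can be sketched with a deliberately simplified model. It assumes a group of 32 threads executing in lockstep (the warp size on NVIDIA hardware) and made-up cycle counts for each side of an if-else; `warp_cost` is an illustrative name, and real hardware behaviour is more nuanced.

```python
WARP_SIZE = 32  # threads that execute in lockstep on NVIDIA GPUs


def warp_cost(takes_branch, cost_if, cost_else):
    """Cycles one warp spends on an if/else under a simple lockstep model.

    If every thread agrees, only that path runs. If they disagree, the warp
    runs both paths one after the other with the inactive threads masked off.
    """
    cost = 0
    if any(takes_branch):
        cost += cost_if
    if not all(takes_branch):
        cost += cost_else
    return cost


uniform = [True] * WARP_SIZE
divergent = [i % 2 == 0 for i in range(WARP_SIZE)]

# Assumed costs: 10 cycles for the "if" path, 30 for the "else" path.
print(warp_cost(uniform, 10, 30))    # 10
print(warp_cost(divergent, 10, 30))  # 40
```

In this model a single disagreeing thread is enough to make the whole group pay for both paths, which is why data is often arranged so that neighbouring threads take the same branch.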

2. High Cost:

High-performance GPUs are expensive to set up and maintain, making them less accessible to some organizations.

Initial Investment: The cost of purchasing high-end GPUs can be prohibitive for small businesses or research institutions with limited budgets.

Maintenance: Maintaining and upgrading GPU infrastructure can also be costly, particularly for on-premises setups.

Example:

Building a GPU cluster for deep learning research can cost hundreds of thousands of dollars, which may not be feasible for all academic institutions or small companies.

3. Programming Complexity:

Writing code for GPUs is more complex than programming for CPUs, requiring developers to understand parallel programming concepts and be familiar with GPU-specific languages and libraries.

Parallel Programming: Developers need to understand how to break down tasks into parallelizable units and manage synchronization between threads.

Specialized Languages: Languages like CUDA (for NVIDIA GPUs) and OpenCL require additional learning and expertise.

Example:

A software engineer experienced in traditional CPU programming might find it challenging to optimize code for GPU execution, requiring significant time and training to become proficient.
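A tree-shaped sum is a common example of restructuring a task into parallel units with synchronization between steps. The sketch below emulates it sequentially in Python; `tree_reduce_sum` is an illustrative name. Within each pass, every addition touches different elements and could run at the same time; the passes themselves must run in order, which corresponds to a synchronization barrier on a GPU.

```python
def tree_reduce_sum(values):
    """Sum values in pairwise passes; return (total, number_of_passes)."""
    data = list(values)
    n = len(data)
    stride = 1
    passes = 0
    while stride < n:
        # Every iteration of this inner loop is independent of the others,
        # so a GPU could assign each one to a separate thread.
        for i in range(0, n - stride, 2 * stride):
            data[i] += data[i + stride]
        # A real kernel would synchronize its threads here before continuing.
        stride *= 2
        passes += 1
    return (data[0] if data else 0), passes


print(tree_reduce_sum(range(1, 9)))  # (36, 3)
```

Eight values are summed in three passes instead of seven sequential additions, and the number of passes grows only logarithmically with the input size.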

4. Debugging Issues:

Debugging GPU-accelerated code is more complex than solving bugs in CPU code, often requiring specialized tools to identify and resolve issues.

Complexity: The parallel nature of GPU code can make it difficult to pinpoint errors, especially those that arise from race conditions or memory access issues.

Specialized Tools: Tools like NVIDIA Nsight or AMD’s ROCgdb (which superseded the now-discontinued CodeXL) are needed for debugging, and they come with a learning curve of their own.

Example:

A developer working on a machine learning application may spend considerable time debugging issues related to memory access patterns or thread synchronization, which are less common in CPU programming.
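A race condition of the kind described above can be reproduced deterministically by emulating the worst-case interleaving. In this sketch, which uses illustrative function names and no real threads, every emulated thread reads a shared counter before any of them writes it back.

```python
def racy_increment(num_threads):
    """Emulate threads that all read the counter before any of them writes."""
    counter = 0
    snapshots = [counter for _ in range(num_threads)]  # phase 1: all threads read
    for seen in snapshots:                             # phase 2: all threads write
        counter = seen + 1
    return counter


def atomic_increment(num_threads):
    """Emulate an atomic add: each read-modify-write finishes before the next."""
    counter = 0
    for _ in range(num_threads):
        counter += 1
    return counter


print(racy_increment(32))    # 1: 31 of the 32 updates are lost
print(atomic_increment(32))  # 32
```

On real hardware the interleaving varies from run to run, so the wrong answer may only appear occasionally, which is part of what makes such bugs hard to track down.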

5. Data Transfer Overhead:

Moving data between the CPU and GPU often introduces overhead when dealing with large datasets, requiring careful optimization of memory usage.

Latency: Data transfer between the CPU and GPU typically travels over a link, such as PCIe, that is much slower than the GPU's own memory. These transfers can create bottlenecks that reduce overall performance gains.

Memory Management: Efficient memory management is crucial to minimize data transfer times and optimize performance.

Example:

In a scientific simulation, transferring large datasets from the CPU to the GPU for processing and then back again can negate some of the speed advantages of GPU computation, necessitating careful data management strategies.
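The effect of transfer overhead on overall speedup is easy to estimate. The figures in this sketch (data size, link bandwidth, and both compute times) are assumptions chosen to make the arithmetic clear, not measurements, and the function names are illustrative.

```python
def transfer_seconds(num_bytes, bandwidth_bytes_per_s):
    """Time to move data across the CPU-GPU link."""
    return num_bytes / bandwidth_bytes_per_s


def gpu_total_seconds(bytes_in, bytes_out, bandwidth_bytes_per_s, kernel_seconds):
    """Copy inputs to the GPU, compute, then copy results back."""
    return (
        transfer_seconds(bytes_in, bandwidth_bytes_per_s)
        + kernel_seconds
        + transfer_seconds(bytes_out, bandwidth_bytes_per_s)
    )


# Assumed scenario: 8 GB in, 8 GB out, a 16 GB/s link,
# 0.5 s of GPU compute versus 3.0 s on the CPU.
cpu_seconds = 3.0
kernel_seconds = 0.5
total = gpu_total_seconds(8e9, 8e9, 16e9, kernel_seconds)

print(cpu_seconds / kernel_seconds)  # 6.0: speedup if transfers were free
print(total)                         # 1.5
print(cpu_seconds / total)           # 2.0: speedup once transfers are counted
```

In this scenario two thirds of the GPU time is spent moving data, so keeping data resident on the GPU across several steps recovers most of the lost speedup.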

6. Compatibility Issues:

Not all applications and libraries support GPU acceleration, requiring developers to adapt or rewrite code to ensure compatibility.

Limited Support: Some software and legacy systems do not support GPU acceleration, limiting the ability to leverage GPU power.

Adaptation: Developers may need to rewrite significant portions of code to make use of GPU capabilities.

Example:

An organization using a legacy financial modeling application may need to invest substantial effort in rewriting the application to benefit from GPU acceleration, which can be resource-intensive and time-consuming.

7. Vendor Lock-in Concerns:

Different vendors have their own proprietary technologies and libraries for GPU computing, which can lead to vendor lock-in problems.

Proprietary Technologies: Each GPU vendor (e.g., NVIDIA, AMD) has its own set of tools, libraries, and technologies, which can limit flexibility and portability.

Vendor Dependence: Relying heavily on a specific vendor’s ecosystem can make it difficult to switch to other solutions in the future.

Example:

A company that has developed its machine learning models using NVIDIA’s CUDA framework might find it challenging to switch to AMD GPUs without significant code rewrites and optimizations, potentially increasing costs and effort.

Potential Future Impact of GPUs:

1. Increased Adoption in Emerging Technologies:

GPUs are expected to play a crucial role in several emerging technologies, significantly enhancing their capabilities and performance.

Edge Computing: GPUs can bring powerful computing closer to data sources, reducing latency and enabling real-time data processing. This is particularly important for applications requiring immediate responses, such as industrial automation and smart cities.

Autonomous Vehicles: GPUs are essential for processing the vast amounts of data from sensors and cameras in real-time, enabling the complex computations needed for navigation, obstacle detection, and decision-making in autonomous driving.

Augmented Reality (AR): The rendering and processing power of GPUs can provide smoother and more immersive AR experiences, enhancing applications in gaming, education, and industrial training.

Example:

Companies like NVIDIA are developing specialized GPUs for edge computing devices, which can be used in smart home devices, retail analytics, and remote monitoring systems.

2. Advancements in Artificial Intelligence and Machine Learning:

GPUs will continue to drive significant advancements in AI and ML, enabling more sophisticated applications and improved performance.

Enhanced Training: The parallel processing capabilities of GPUs make them ideal for training complex neural networks faster and more efficiently, allowing for the development of advanced AI models.

Real-Time Inference: GPUs can process AI inference tasks in real-time, enabling applications such as real-time language translation, personalized recommendations, and dynamic content generation.

Example:

Deep learning frameworks like TensorFlow and PyTorch are optimized to leverage GPU acceleration, resulting in faster training times for models used in natural language processing, image recognition, and autonomous systems.

3. Improved Energy Efficiency:

For highly parallel workloads, GPUs can deliver more computation per watt than general-purpose CPUs, which will become increasingly important as concerns about energy consumption and sustainability grow.

Energy-Efficient Design: Modern GPUs are designed to deliver high throughput per watt on parallel workloads, so a job can often be completed with less total energy than on a traditional CPU-based system. This makes them more suitable for large-scale deployments.

Sustainability: As data centers and computing needs grow, the energy efficiency of GPUs will contribute to reducing the overall carbon footprint of IT infrastructure.

Example:

Data centers utilizing GPUs for parallel processing tasks can achieve higher computational throughput with lower energy consumption, leading to cost savings and reduced environmental impact.

4. New Use Cases and Applications:

As GPU technology advances, new use cases and applications will emerge, further expanding the scope of GPU computing.

Healthcare: GPUs can be used for real-time analysis of medical images, personalized medicine through genetic data analysis, and simulating complex biological processes.

Financial Services: High-performance GPUs can accelerate risk analysis, fraud detection, and algorithmic trading, providing faster insights and responses in financial markets.

Entertainment: Beyond gaming, GPUs can enhance content creation, video editing, and virtual reality experiences, providing high-quality visual effects and interactive environments.

Example:

In healthcare, GPUs are being used to power advanced diagnostic tools that can analyze CT scans and MRIs more quickly and accurately, leading to faster diagnoses and better patient outcomes.

5. Enhanced Cybersecurity:

GPUs can significantly enhance cybersecurity measures by accelerating data processing and enabling advanced analytical techniques.

Threat Detection: GPUs can quickly analyze large volumes of network traffic and user data to detect anomalies and potential security threats in real-time.

Encryption and Decryption: The parallel processing power of GPUs can accelerate cryptographic algorithms, making data encryption and decryption processes faster and more efficient.

Example:

Security companies can use GPU-accelerated systems to enhance the performance of intrusion detection systems (IDS) and malware analysis tools, providing faster and more reliable protection against cyber threats.

6. Advanced Scientific Research:

GPUs are expected to continue driving advancements in scientific research by enabling more complex simulations and data analysis.

Climate Modeling: GPUs can process vast amounts of climate data to create more accurate and detailed climate models, aiding in the prediction and understanding of climate change impacts.

Genomics: In genomics, GPUs can accelerate the processing of genetic data, enabling faster analysis and facilitating advancements in personalized medicine and disease research.

Example:

Researchers at institutions like CERN use GPUs to analyze the massive data sets generated by particle collisions in the Large Hadron Collider, helping to uncover new particles and understand fundamental physics.

7. Enhanced Human-Computer Interaction:

GPUs will play a crucial role in advancing human-computer interaction (HCI) technologies, making interfaces more intuitive and responsive.

Voice Recognition: GPUs can process voice commands more quickly, improving the responsiveness and accuracy of voice-controlled systems.

Gesture Recognition: In combination with machine learning, GPUs can enhance gesture recognition systems, enabling more natural and intuitive ways to interact with technology.

Example:

Devices like smart speakers and virtual assistants (e.g., Amazon Alexa, Google Assistant) can leverage GPU acceleration to provide faster and more accurate responses to user queries.

8. IoT and Smart Devices:

GPUs will increasingly be integrated into Internet of Things (IoT) and smart devices, enhancing their capabilities and efficiency.

Data Processing: IoT devices equipped with GPUs can process data locally, reducing the need for data transmission to cloud servers and enabling real-time decision-making.

Smart Homes: In smart home environments, GPUs can enhance the performance of devices like smart cameras, thermostats, and home assistants, providing more seamless and efficient automation.

Example:

Smart surveillance cameras can use GPUs to perform real-time video analysis, such as facial recognition and motion detection, directly on the device, enhancing security and privacy.

9. Accelerated Innovation in Robotics:

GPUs will drive innovations in robotics by providing the necessary computational power for complex tasks and real-time processing.

Autonomous Robots: GPUs can enable autonomous robots to process sensory data, make decisions, and navigate environments more effectively.

Industrial Automation: In manufacturing, GPUs can power robotic systems that perform precision tasks, improve quality control, and increase production efficiency.

Example:

Companies like Boston Dynamics use GPUs to power their advanced robotics systems, enabling them to perform complex tasks such as navigating rough terrain and interacting with objects in real-time.

Summing Up:

Graphics Processing Units (GPUs) have become a fundamental component of modern computing, offering exceptional processing power and versatility. Their ability to handle parallel computations efficiently has transformed numerous fields, from artificial intelligence and scientific research to video rendering and cloud computing. The advancements in GPU technology, including enhanced parallelism, optimized memory, and specialized programming languages, have unlocked new levels of performance and capability, making GPUs indispensable for complex and data-intensive tasks.

However, the adoption of GPUs comes with its set of challenges, including high costs, programming complexity, and data transfer overheads. Despite these hurdles, the future of GPUs looks promising. They are set to play a pivotal role in emerging technologies, drive further advancements in AI and machine learning, and contribute to improved energy efficiency and sustainability. As GPUs continue to evolve, they will open up new possibilities and applications, solidifying their position as a cornerstone of innovative computing solutions and driving progress across various industries.